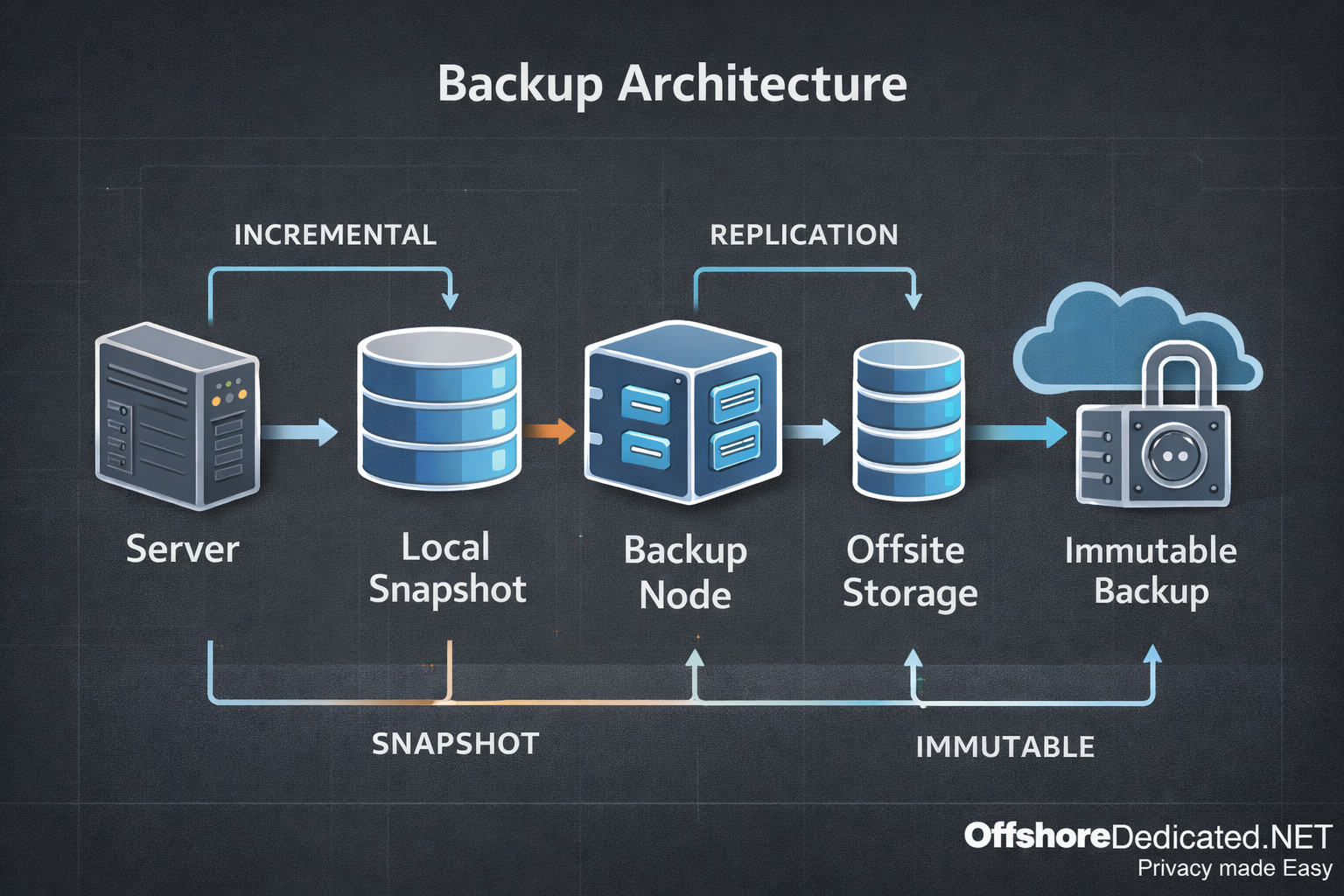

How Modern Backup Architectures Actually Work (Incremental, Snapshot, Offsite & Immutable Strategies)

Backups are not a feature. They are an architecture. In serious hosting environments, whether Shared Hosting, VPS, Cloud Servers, Dedicated, or Streaming Dedicated servers, backups must be